Becoming the Screw

Chapter 20: Extending ourselves into our tools and creations

Dear friend,

As AI continues to prove it can do many jobs better than humans, we are coping with being downgraded from highly skilled pilots to autopilot babysitters. In this week’s chapter of User Zero I talk about planes falling from the sky. I’m going to talk about our under-used ability to extend our minds beyond our bodies and into the machines that we use. If I were writing this today I would probably contrast it with the inverse idea, the situation where our machines are extending themselves inside our minds, replacing original thoughts with algorithmically optimized filler. It makes you wonder who’s flying our mental airships, doesn’t it? Stay creative.

Your friend,

Ade

“What usually happens in the educational process is that the faculties are dulled, overloaded, stuffed and paralyzed so that by the time most people are mature they have lost their innate capabilities.” —Buckminster Fuller

When a plane crashes, we look for someone to blame. We always find someone, and as long as the reason can fit in a headline or tweet, the excuses rarely receive scrutiny. Whether real or invented, these excuses typically satisfy the bloodlust of post-accident public outcry. If human error is to blame, the scapegoat is lack of training. If technology is implicated, the blame usually gets directed at corporate greed. If systems fail, we blame bureaucracy. Even though at the center of most tragedies you will find a human interacting with a machine, bad design rarely gets mentioned.

Unlisted in the five senses is our capacity to live inside inanimate objects. When you are driving, you are not simply pushing on pedals and spinning a wheel. No, you feel the entire car as if it is an extension of your entire body. You have a tactile sensation of how the wheels are gripping the ground. You feel the borders of the vehicle as you maneuver tight spaces. You monitor the engine, your perceptions tuned to any variations that might signal a problem. In a sense you are a cyborg: the car becomes an extension of your body.

We become the objects we use. We don’t just twist a wrench, we become the bolt in the grip of its jaws. We feel the metal bend to our pressure until the threads release. When you are working on a screw, you inhabit this tiny world, exploring the inner surfaces that hold the system together.

When you grasped a pencil in your fist for the first time, you were building a mental map of that tool that would serve you for the rest of your life. At first your lines were dark and jagged. As you gained control of the tool, the line smoothed and softened as the shapes of your name became increasingly legible. For those who stick with it, the pencil evolves from a line-making tool into something that produces infinite shades of gray until the marks are indistinguishable from photographs.

In sports, the best players are said to have touch. A tennis player feels the strings of the racket, the weight of the ball, the height of the net, the edges of the court. At their best, they are so in tune with this world that they appear to have precognition; it is as if the game is bending to their will. Instead of reacting to the game, the game seems to be reacting to them.

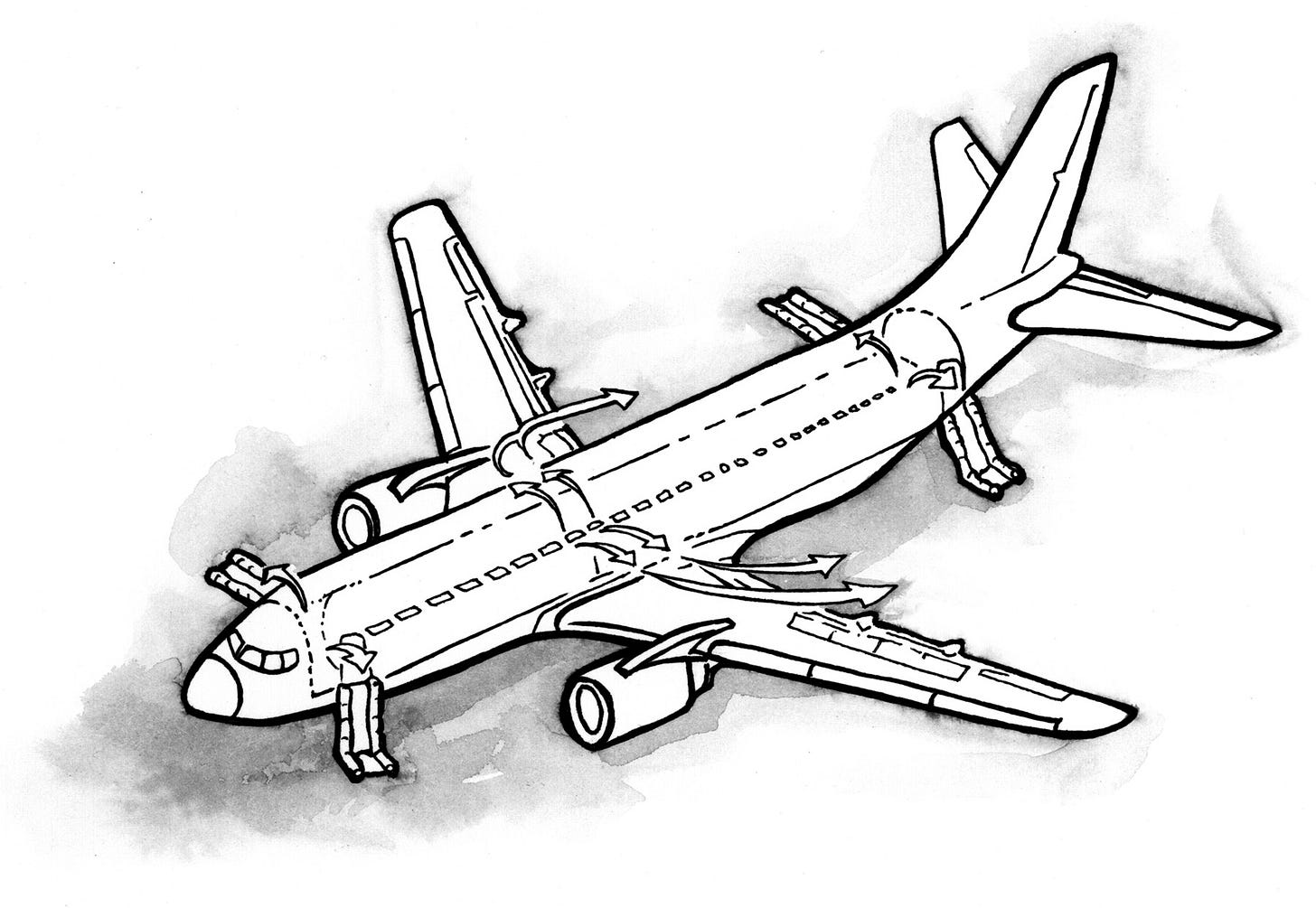

Pilots have a term called airmanship. It encompasses many factors of flight, but perhaps most important is the ability of the pilot to feel the plane. Just as a mechanic inhabits the world at the tips of his wrenches, the best pilots extend themselves into the wings as if they were strapping the machine on their shoulders. When all the engines of your plane go out, autopilot won’t save you. Your best hope is that the pilot with the plane strapped to their back will save themselves. You want somebody like Chesley “Sully” Sullenberger at the controls to bring the plane down safely in the Hudson River.

And yet, the incredible safety record of airplanes isn’t because they are flown by pilots like Sully, but because the nuance of flight has been automated out of the system by autopilot and error-proof protocol. Human error has been removed from the system every time it has been detected. Aside from minimal intervention on takeoff and landing, today’s airplanes essentially fly themselves.

How do you train the 300,000 people required to operate the world’s commercial airplanes? Should you demand that every plane be helmed by military-grade pilots like Sully or do you trust the autopilot and train pilots to fill in the tiny gaps where autopilot is worse than humans? The decision always falls in favor of autopilot and we are safer because of it. Until we aren’t.

What gets lost in the debate of man vs. autopilot is that no matter where you draw the line, there are design decisions that need to be made at the point of contact between the human and the machine. This results in two different approaches to airplane design as represented by Airbus and Boeing. If you believe in the machine, you err on the side of letting the plane make the critical decisions. This is the philosophy of Airbus. Conversely, if you trust the computer-beating heroics of pilots in emergency situations, you make different design decisions, as Boeing does.

Airbus makes planes that are easier to fly because they are invested in computer guidance. Boeing planes are slightly harder to fly, but they allow the pilot to intervene should the computer systems fail.

To be fair, both approaches have led to near-identical safety records because Airbus and Boeing airplanes are both heavily autopilot driven. The subtly different design philosophies result in an extremely small surface where life-and-death decisions are made by either a human or a robot. Given the volume of flights, however, this exposure will eventually manifest itself, as we will see soon. But first we should ask ourselves a question. Which type of plane would you rather fly?

Many people might select the autopilot approach of the Airbus and the easier paycheck, although I bet most of us would rather fly the Boeing plane that leaves a tiny window for heroic intervention. When the situation arises, we like to believe that we could grab the controls and guide our ship to safety. We hesitate to surrender our sense of feel, to transfer the controls to a computer. We would rather feel the tool as an extension of our bodies so that we can affect the path of the plunging craft.

Lion Air Flight 610 crashed on October 29, 2018, followed four months later by Ethiopian Airlines Flight 302. These tragedies led to the grounding of the entire fleet of Boeing 737 Max airplanes. In the moments before these planes crashed, a battle was taking place in the cockpits. It wasn’t a fight between the pilots, but rather a battle between man and autopilot.

At the heart of the accidents is an obscure mechanism called the Maneuvering Characteristics Augmentation System (MCAS) which is unique to the 737 Max. All you need to know about the MCAS is that it is an automatic feature that kicks in when certain rare conditions arise in flight. The conditions are so rare, and the effect is so simple to recover from that it was decided that they didn’t need to be described in the 737 Max manuals and no special training was necessary.

Before you blame this decision, remember that a self-flying airplane is an extremely complex machine. The safety record of planes has come from this type of decision. You save lives by removing pilot cognitive load. In the rare case when the MCAS engaged, the plane would dip (something every pilot experiences regularly). Rather than explain a new complexity to the pilots, the designers of the MCAS made a gamble. Instead of burdening the pilots with technical specifications about an automated feature that most pilots would never experience, they relied on the pilots’ existing knowledge. Should the MCAS engage, every pilot should recognize the feeling of the plane and either manually correct the imbalance or flip a switch and let the computer do it.

At the risk of extreme over-simplification, here is what happened on flight 610 in the moments prior to the crash. The plane took off with a faulty sensor that contributed to a situation where the MCAS wrongly engaged. As a result, the pilot felt the plane dive and reacted to counteract the unexpected force. Had the pilot simply flipped a switch allowing the computer to correct the imbalance (something routine to every pilot), the plane would have recovered. Instead, he flew by feel, manually countering the phantom forces caused by the MCAS. In essence, the human and the autopilot were battling for control of the plane. In the last seconds, the pilot gave the control to his co-pilot, severing his tenuous connection between man and machine. The second pilot, lacking the feel of the plane, couldn't maintain the battle and the plane crashed.

The fate of Ethiopian Airlines Flight 302 four months later was slightly different. The same sensor that failed on flight 610 malfunctioned on flight 302. These pilots and the entire fleet, having been retroactively briefed on the MCAS, knew that when they felt the plane dive as a result of the MCAS they simply needed to flip the switch that allowed the computers to correct the imbalance. So when the 302’s nose dipped, that’s what they did. Although they passed the first obstacle, in the confusion of the malfunctioning sensor the throttle hadn’t been reduced after takeoff. This caused the plane’s speed to exceed the limits within which automatic correction could engage, which forced the pilots to manually compensate for the plane’s dipping. The pilot believed that if he could engage autopilot, the plane would correct itself. Unfortunately, autopilot can’t be engaged when you are pulling on the controls. So he stopped pulling on the stick and released the column, sending the plane into a dive. Just as the autopilot engaged, the MCAS activated again, doubling the angle of the already descending plane. The autopilot was not able to recover and the plane hit the ground with the throttle still in takeoff position.

Again, my descriptions of these accidents are over-simplified. One of the lessons of this book is to be skeptical of know-it-alls and I am trying to not fall into that trap. I encourage you to seek out the complete details of the accidents if you want to fully understand the MCAS and the subtle chain of events that caused the tragedies.

While the MCAS receives the bulk of the scrutiny and blame in the analysis of flights 610 and 302, there is a contradiction that seems to be hiding in plain view. One plane crashed because a pilot refused to relinquish control to the autopilot. The other plane crashed because the pilot was unable to transfer control to the autopilot.

The question isn’t whether or not autopilot is better than humans. The challenge is how we handle the overlapping space, the gray area where human intention is opposed by the technology that we have hired.

If you decide tomorrow to become a pilot, you can expect to spend thousands of hours, if not your entire career, without ever encountering a situation where your abilities come into conflict with the plane’s autopilot. In the rare instance when a human decision can prevent a plane from falling from the sky, the life-saving knowledge should reside in the skull of the pilot, in the muscle memory of his hands, in his ability to feel the plane as if it were an extension of his body. Otherwise we can’t understand why the tragedy happens. Instead the knowledge is locked in a meeting room in the bowels of a corporate office, a bullet point on a slide deck, a decision by a committee of analysts who are themselves slaves to the output of computers that have automated their decision making. This ignorance gets passed along to the analysis of the accident. Soon an entire fleet of planes is grounded for reasons nobody understands. We are left to cling to unprovable allegations of incompetence, corruption, and cover-ups.

Technology has proven it can protect us from ourselves. When the risk of human error is high we can automate the system to remove the messy human factor from the equation. The tradeoff of this gamble is that it requires us to sever ourselves from our machines. Autopilot limits the distance that we can project our intelligence into machines. Instead of being masters of our tools, we become dumb users poking at screens and guessing what the outcome will be.

The human ability to extend ourselves into our tools is not limited to physical objects. Anyone who has become immersed in a video game has felt themselves extend into the digital world. When you are in this state, the worst possible technical shortcoming is lag, the unwanted delay between your actions and their effect in the digital world. This breaks the illusion and severs the perception of being in control. A modern cockpit has more in common with a digital game than it does with the physical controls of the first airplanes. There was a time when a pilot’s hand could pull on a stick that was still physically connected to the plane’s control surfaces. Many of our tools, although they feel real, are actually well-crafted illusions. The airplane is completely automated; the controls in the hands of the pilots aren’t manually controlling the rudders. They are simulating a feeling that maps to the pilots’ perception that they are in control.

We want our planes to be flown by heroes, not robots. We want to pilot our careers, not be custodians of impenetrable technology. Our ancestors were masters of hand-made tools. We are obsoleted by our tools, barely needed unless our machine masters require us to replace their batteries. The endgame is a reality completely disconnected from our inputs, a situation where every action we take feels self-motivated but is actually orchestrated by artificial intelligence so advanced that we can’t even feel the puppet masters pulling our strings.

User zero requires visibility into the systems we want to change. Our ability to extend our beings into our tools is how we will turn the screw. This can’t be done by remote control. No, we must become the screw through an unbroken connection to our tools. If we release our hands from the controls, the plane will nosedive. In the next chapter we will look at the most misused tool humanity has ever invented, the ubiquitous PowerPoint presentation.